Description

Unleash industrial-scale AI performance with the Krambu HGX B300 DLC AI Factory Cluster, a turnkey supercomputing platform engineered for frontier-level workloads. This fully integrated 72-node cluster packs 576 NVIDIA B300 SXM GPUs (8 per node), two Intel Xeon 6776P processors per node, and 216 TB of DDR5 system memory across the compute nodes. It delivers extreme throughput for large-scale AI training, foundation models, scientific simulation, and high-performance analytics.

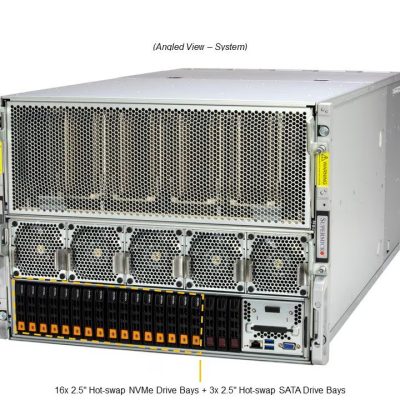

Each compute node combines 8 B300 GPUs, 3 TB of DDR5, high-density local NVMe storage, and NVIDIA InfiniBand XDR connectivity. Nodes are linked in a non-blocking fat-tree topology, which provides full bisection bandwidth for GPU-to-GPU traffic between nodes. The cluster is backed by a dedicated WEKA NVMe storage layer with more than 1 PB of raw capacity, high-speed management and data planes built on NVIDIA BlueField-3 DPUs and ConnectX-7 network adapters, and a high-bandwidth Ethernet fabric for storage, orchestration, and external connectivity.

Designed as a complete Scalable Unit with 8 compute racks and 4 management racks, this system delivers massive parallel compute while maintaining operational control, resilience, and predictable performance at scale. With an aggregate power envelope of approximately 1.3 MW, the Krambu HGX B300 DLC Cluster is purpose-built for organizations pushing the limits of AI, HPC, digital twins, climate modeling, genomics, and next-generation autonomous systems.
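The headline totals follow directly from the per-node configuration. The short Python sketch below reproduces that arithmetic. It assumes identical compute nodes spread evenly across the 8 compute racks, which is an assumption, since the exact rack layout is not stated. It also treats the 1.3 MW envelope as covering the whole Scalable Unit, so the per-node power figure it derives is only an upper bound on average compute-node draw. The function name `cluster_totals` is hypothetical.

```python
# Figures taken from the description; rack layout is assumed to be even (hypothetical).
NODES = 72
GPUS_PER_NODE = 8
DDR5_TB_PER_NODE = 3
COMPUTE_RACKS = 8
POWER_ENVELOPE_KW = 1300  # "approximately 1.3 MW" for the whole Scalable Unit


def cluster_totals(nodes: int, gpus_per_node: int, ddr5_tb_per_node: int,
                   compute_racks: int, power_kw: float) -> dict:
    """Derive cluster-wide totals from per-node specs (illustrative sketch only)."""
    if nodes % compute_racks != 0:
        raise ValueError("nodes do not divide evenly across compute racks")
    return {
        "gpus": nodes * gpus_per_node,                      # 72 x 8
        "ddr5_tb": nodes * ddr5_tb_per_node,                # 72 x 3 TB
        "nodes_per_rack": nodes // compute_racks,           # assumes an even split
        "gpus_per_rack": nodes * gpus_per_node // compute_racks,
        # The envelope also feeds management racks, storage, and networking,
        # so this average is an upper bound on per-compute-node draw.
        "max_avg_kw_per_node": round(power_kw / nodes, 1),
    }


totals = cluster_totals(NODES, GPUS_PER_NODE, DDR5_TB_PER_NODE,
                        COMPUTE_RACKS, POWER_ENVELOPE_KW)
print(totals)
```

Under these assumptions each compute rack holds 9 nodes (72 GPUs), and the average power budget works out to roughly 18 kW per compute node before subtracting storage, networking, and management loads.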